MPII Human Pose Dataset

Team

Mykhaylo Andriluka

Leonid Pishchulin

Peter Gehler

Bernt Schiele

If you have any questions, please contact leonid at mpi-inf.mpg.de directly.

References

-

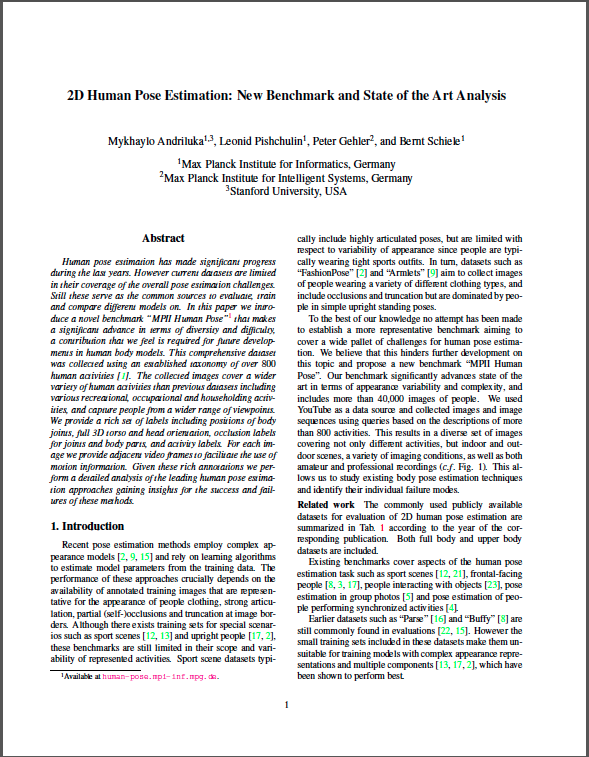

2D Human Pose Estimation: New Benchmark and State of the Art Analysis.

Mykhaylo Andriluka, Leonid Pishchulin, Peter Gehler and Bernt Schiele.

IEEE CVPR'14

-

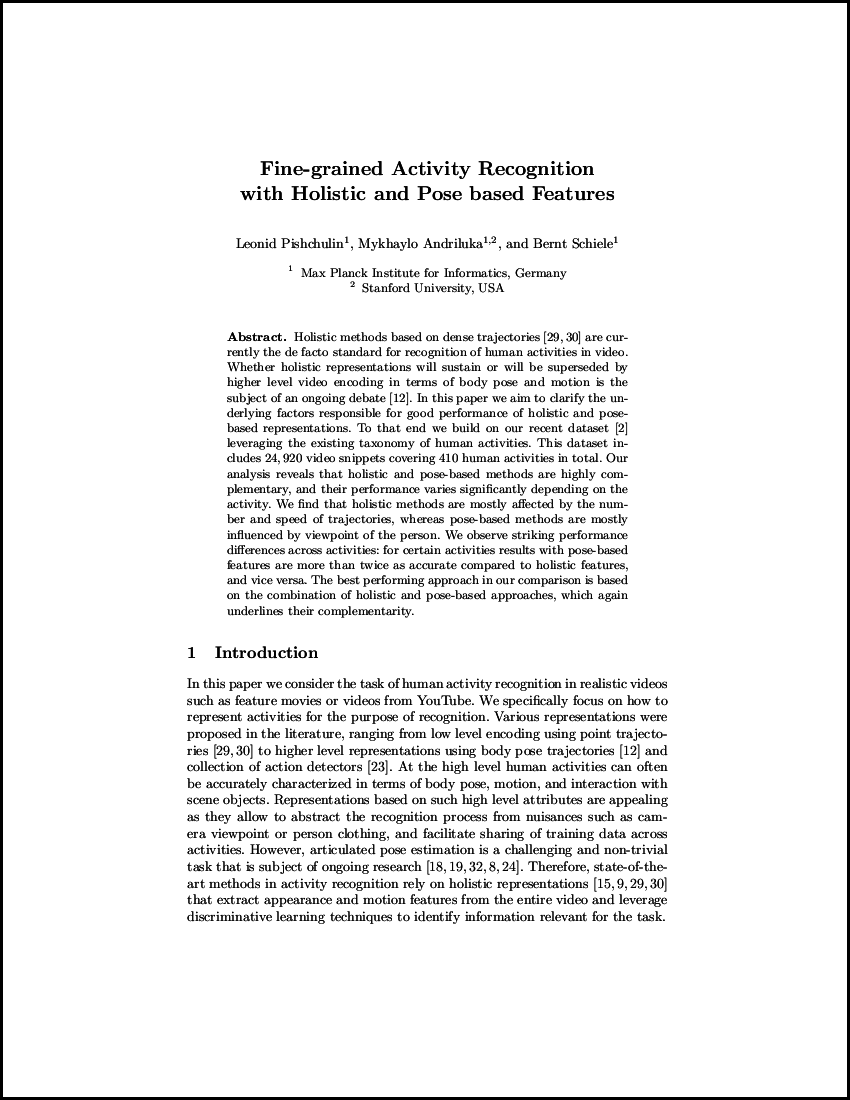

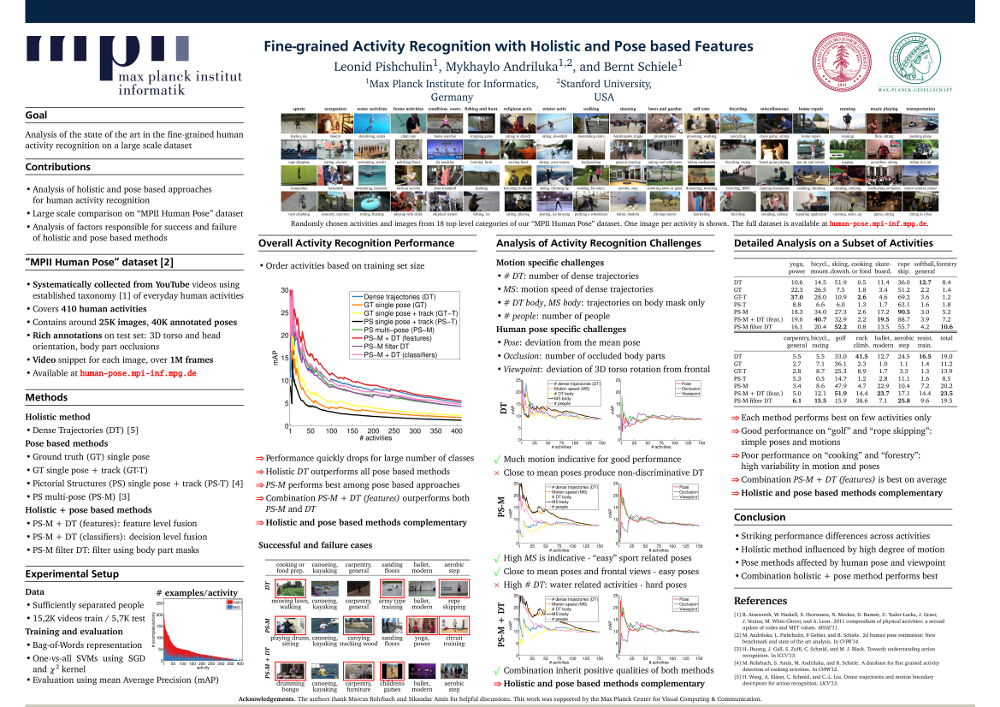

Fine-grained Activity Recognition with Holistic and Pose based Features.

Leonid Pishchulin, Mykhaylo Andriluka and Bernt Schiele.

GCPR'14

Introduction

The MPII Human Pose dataset is a state-of-the-art benchmark for the evaluation of articulated human pose estimation. The dataset includes around 25K images containing over 40K people with annotated body joints. The images were systematically collected using an established taxonomy of everyday human activities. Overall, the dataset covers 410 human activities, and each image is provided with an activity label. Each image was extracted from a YouTube video and is provided with preceding and following un-annotated frames. In addition, for the test set we obtained richer annotations including body part occlusions and 3D torso and head orientations.

Following best practices for performance evaluation benchmarks in the literature, we withhold the test annotations to prevent overfitting and tuning on the test set. We are working on an automatic evaluation server and performance analysis tools based on the rich test set annotations.

Citing the dataset

@inproceedings{andriluka14cvpr,
  author = {Mykhaylo Andriluka and Leonid Pishchulin and Peter Gehler and Bernt Schiele},
  title = {2D Human Pose Estimation: New Benchmark and State of the Art Analysis},
  booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2014},
  month = {June}
}

Publications


| CVPR'14 paper | CVPR'14 poster | GCPR'14 paper | GCPR'14 poster |

*Red boxes denote training samples

Download

Copyright 2015 Max Planck Institute for Informatics

Licensed under the Simplified BSD License [see bsd.txt]

We are making the annotations and the corresponding code freely available for research purposes. Commercial use is not allowed because the authors do not hold the copyright for the images themselves.

You can download the images and annotations from the MPII Human Pose benchmark here:

Below you can download short videos that include the preceding and following frames for each image. The videos are split for download into 25 batches of ~17 GB each:

Batch 1 Batch 2 Batch 3 Batch 4 Batch 5

Batch 6 Batch 7 Batch 8 Batch 9 Batch 10

Batch 11 Batch 12 Batch 13 Batch 14 Batch 15

Batch 16 Batch 17 Batch 18 Batch 19 Batch 20

Batch 21 Batch 22 Batch 23 Batch 24 Batch 25

Image - video mapping (239 KB)

Annotation description

Annotations are stored in a MATLAB structure RELEASE with the following fields:

- .annolist(imgidx) - annotations for image imgidx
  - .image.name - image filename
  - .annorect(ridx) - body annotations for a person ridx
    - .x1, .y1, .x2, .y2 - coordinates of the head rectangle
    - .scale - person scale w.r.t. 200 px height
    - .objpos - rough human position in the image
    - .annopoints.point - person-centric body joint annotations
      - .x, .y - coordinates of a joint
      - id - joint id (0 - r ankle, 1 - r knee, 2 - r hip, 3 - l hip, 4 - l knee, 5 - l ankle, 6 - pelvis, 7 - thorax, 8 - upper neck, 9 - head top, 10 - r wrist, 11 - r elbow, 12 - r shoulder, 13 - l shoulder, 14 - l elbow, 15 - l wrist)
      - is_visible - joint visibility
  - .vidx - video index in video_list
  - .frame_sec - image position in video, in seconds

- img_train(imgidx) - training/testing image assignment

- single_person(imgidx) - contains rectangle id ridx of sufficiently separated individuals

- act(imgidx) - activity/category label for image imgidx
  - act_name - activity name
  - cat_name - category name
  - act_id - activity id

- video_list(videoidx) - specifies video id as is provided by YouTube. To watch video on youtube go to https://www.youtube.com/watch?v=video_list(videoidx)
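As a small illustration of the joint numbering and the video link described above, the following Python sketch maps a 0-based joint id to its name and builds a watch URL from a video id. The names `MPII_JOINT_NAMES`, `joint_name` and `youtube_url` are hypothetical helpers introduced here for illustration and are not part of the released toolkit.

```python
# Joint names in the order of the 0-based joint ids listed in the
# annotation description. The constant and helper names are illustrative.
MPII_JOINT_NAMES = [
    "r ankle", "r knee", "r hip", "l hip", "l knee", "l ankle",
    "pelvis", "thorax", "upper neck", "head top",
    "r wrist", "r elbow", "r shoulder", "l shoulder", "l elbow", "l wrist",
]


def joint_name(joint_id: int) -> str:
    """Return the joint name for a 0-based joint id in the range 0..15."""
    if not 0 <= joint_id < len(MPII_JOINT_NAMES):
        raise ValueError(f"joint id out of range: {joint_id}")
    return MPII_JOINT_NAMES[joint_id]


def youtube_url(video_id: str) -> str:
    """Build the watch URL for one entry of video_list."""
    return "https://www.youtube.com/watch?v=" + video_id
```

For example, `joint_name(9)` gives `"head top"`, matching the id list above.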

Evaluation on MPII Human Pose Dataset

This README provides instructions on how to prepare your predictions using MATLAB for evaluation on the MPII Human Pose Dataset. Predictions should be emailed to [eldar at mpi-inf.mpg.de].

At most four submissions for the same approach are allowed. Any two submissions must be 72 hours apart.

Preliminaries

Download evaluation toolkit.

Multi-Person Pose Estimation

Evaluation protocol

- Evaluation is performed on groups of multiple people. One image may contain multiple groups.

- Each group is localized by computing x1, y1, x2, y2 group boundaries from locations of all people in the group and cropping around those boundaries.

      pos = zeros(length(rect),2);
      for ridx = 1:length(rect)
          pos(ridx,:) = [rect(ridx).objpos.x rect(ridx).objpos.y];
      end
      x1 = min(pos(:,1)); y1 = min(pos(:,2));
      x2 = max(pos(:,1)); y2 = max(pos(:,2));

- Scale of each group is computed as an average scale of all people in the group.

      % one scale value per person, so mean() returns a scalar
      scale = zeros(length(rect),1);
      for ridx = 1:length(rect)
          scale(ridx) = rect(ridx).scale;
      end
      scaleGroup = mean(scale);

- Using ground truth number of people is not allowed.

- Using approximate location of each person while estimating person's pose is not allowed.

- Using individual scale of each person while estimating person's pose is not allowed.
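The group localization and group scale rules above can be sketched in Python as follows. This is a minimal illustration under the assumption that each person is given as a dict with an `objpos` pair and a `scale` value; the function name `group_bounds_and_scale` and this data layout are hypothetical and do not come from the evaluation toolkit.

```python
from statistics import mean


def group_bounds_and_scale(people):
    """Compute group boundaries and group scale for one group.

    people: non-empty list of dicts with keys
        'objpos' -> (x, y) rough person position
        'scale'  -> person scale
    Returns ((x1, y1, x2, y2), scale_group).
    """
    if not people:
        raise ValueError("a group must contain at least one person")
    xs = [p["objpos"][0] for p in people]
    ys = [p["objpos"][1] for p in people]
    # Boundaries are the min/max over all person positions in the group.
    bounds = (min(xs), min(ys), max(xs), max(ys))
    # Group scale is the average of the individual person scales.
    scale_group = mean([p["scale"] for p in people])
    return bounds, scale_group
```

Only the group-level values returned here may be used at test time; per the protocol, the individual positions and scales may not.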

Preparing predictions

- Extract testing annotation list structure from the entire annotation list

      annolist_test = RELEASE.annolist(RELEASE.img_train == 0);

- Extract groups of people using getMultiPersonGroups.m function from evaluation toolkit

      load('groups_v12.mat','groups');
      [imgidxs_multi_test,rectidxs_multi_test] = getMultiPersonGroups(groups,RELEASE,false);

  where imgidxs_multi_test are image IDs containing groups and rectidxs_multi_test are rectangle IDs of people in each group.

- Split testing images into groups

      pred = annolist_test(imgidxs_multi_test);

- Set predicted x_pred, y_pred coordinates in the original image, 0-based joint id (see 'annotation description') and prediction score for each body joint

      pred(imgidx).annorect(ridx).annopoints.point(pidx).x = x_pred;
      pred(imgidx).annorect(ridx).annopoints.point(pidx).y = y_pred;
      pred(imgidx).annorect(ridx).annopoints.point(pidx).id = id;
      pred(imgidx).annorect(ridx).annopoints.point(pidx).score = score;

- Save predictions into pred_keypoints_mpii_multi.mat

      save('pred_keypoints_mpii_multi.mat','pred');

Evaluation Script

Evaluation is performed using the evaluateAP.m function.

Single Person Pose Estimation

Evaluation protocol

- Evaluation is performed on sufficiently separated people.

- Using approximate location and scale of each person is allowed

      pos = [rect(ridx).objpos.x rect(ridx).objpos.y];
      scale = rect(ridx).scale;

Preparing predictions

- Extract testing annotation list structure from the entire annotation list:

      annolist_test = RELEASE.annolist(RELEASE.img_train == 0);

- Extract image IDs and rectangle IDs of single persons

      rectidxs = RELEASE.single_person(RELEASE.img_train == 0);

- Set predicted x_pred, y_pred coordinates in the original image for each body joint of single persons and 0-based joint id (see 'annotation description')

      pred = annolist_test;
      pred(imgidx).annorect(ridx).annopoints.point(pidx).x = x_pred;
      pred(imgidx).annorect(ridx).annopoints.point(pidx).y = y_pred;
      pred(imgidx).annorect(ridx).annopoints.point(pidx).id = id;

- Save predictions into pred_keypoints_mpii.mat

      save('pred_keypoints_mpii.mat','pred');

Evaluation Script

Evaluation is performed using the evaluatePCKh.m function.

Evaluation toolkit

Single Person

Evaluation is performed on sufficiently separated people only ("Single Person" subset).

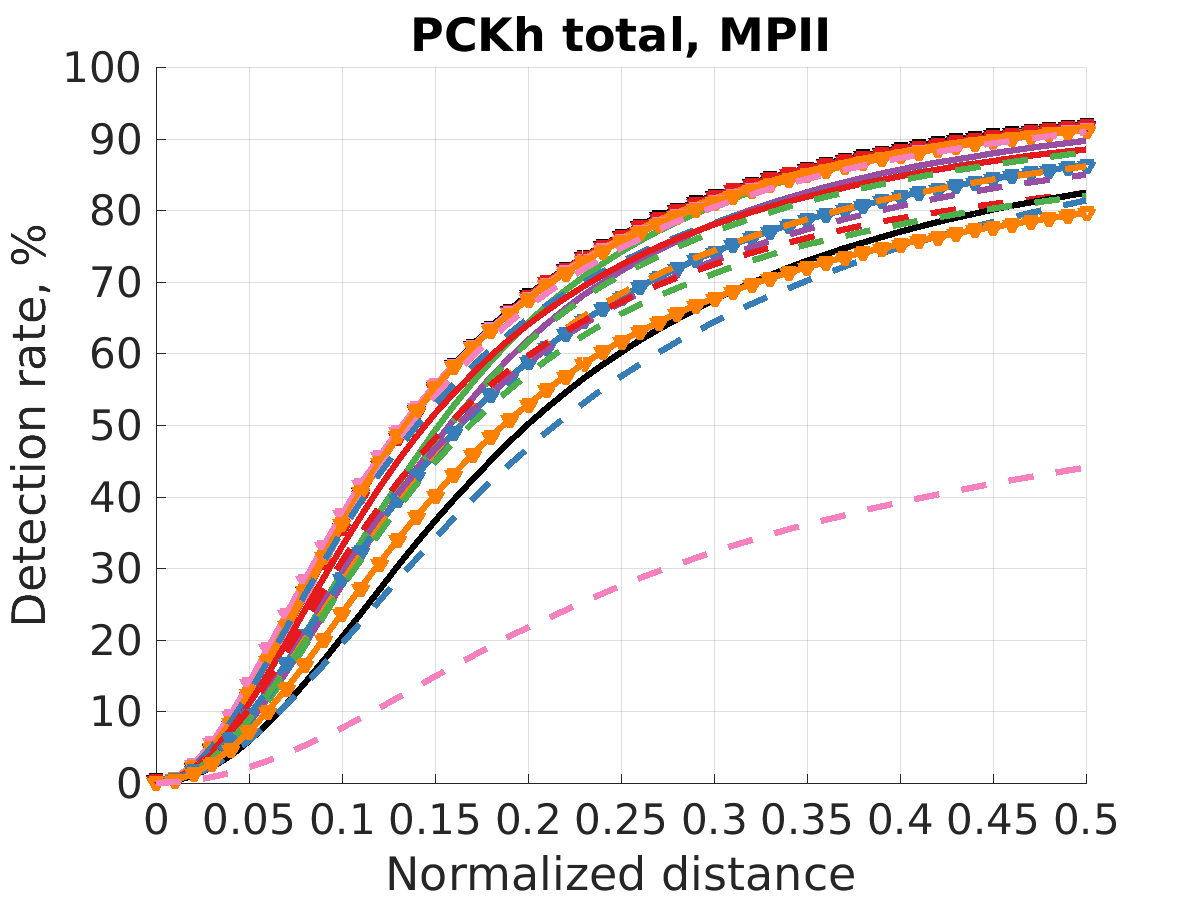

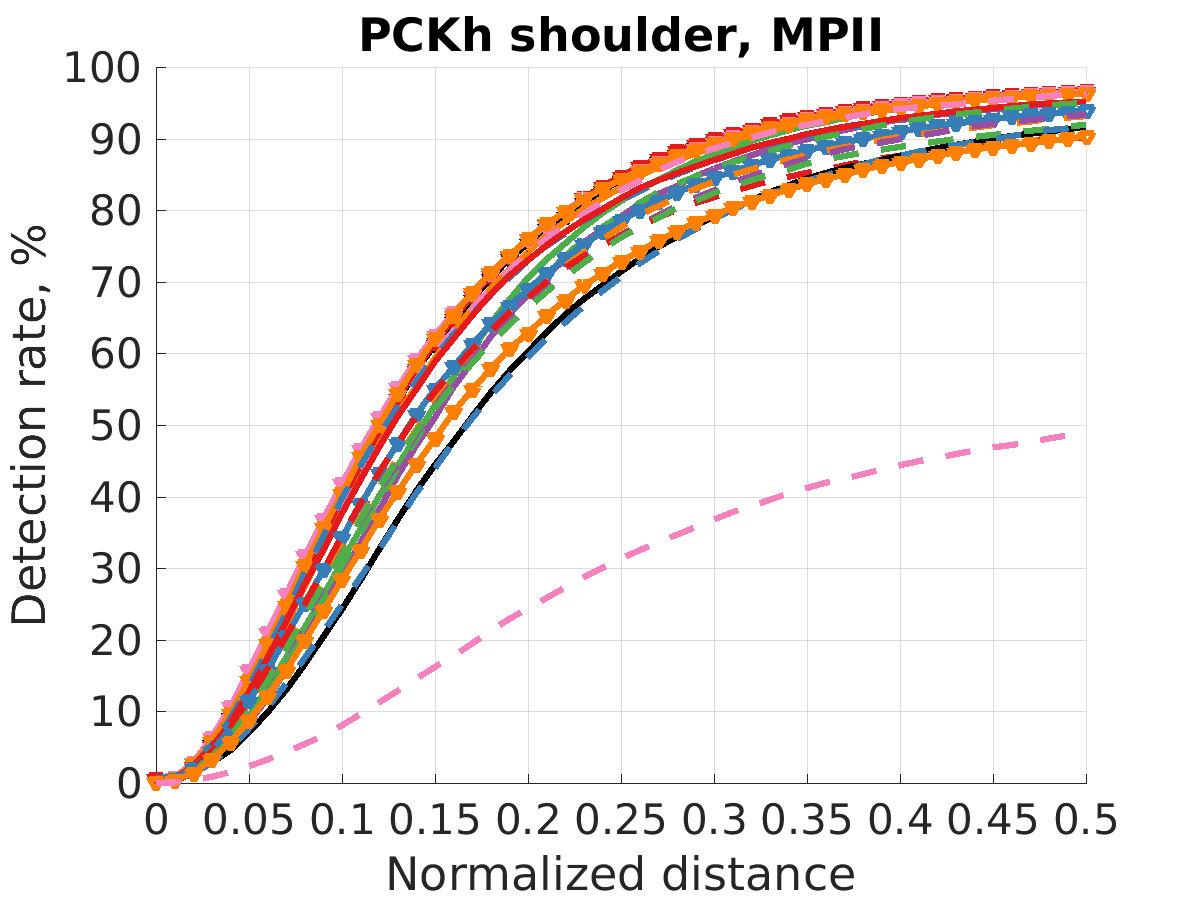

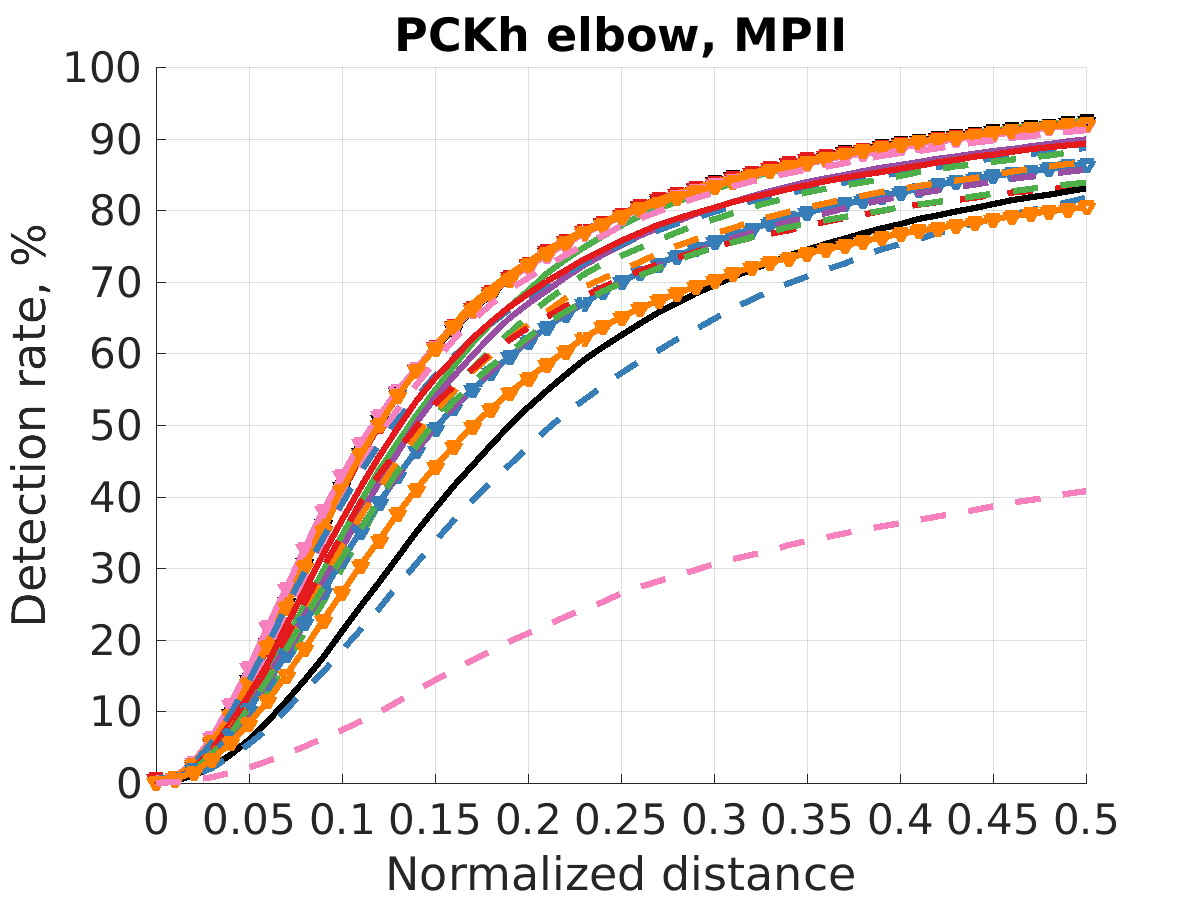

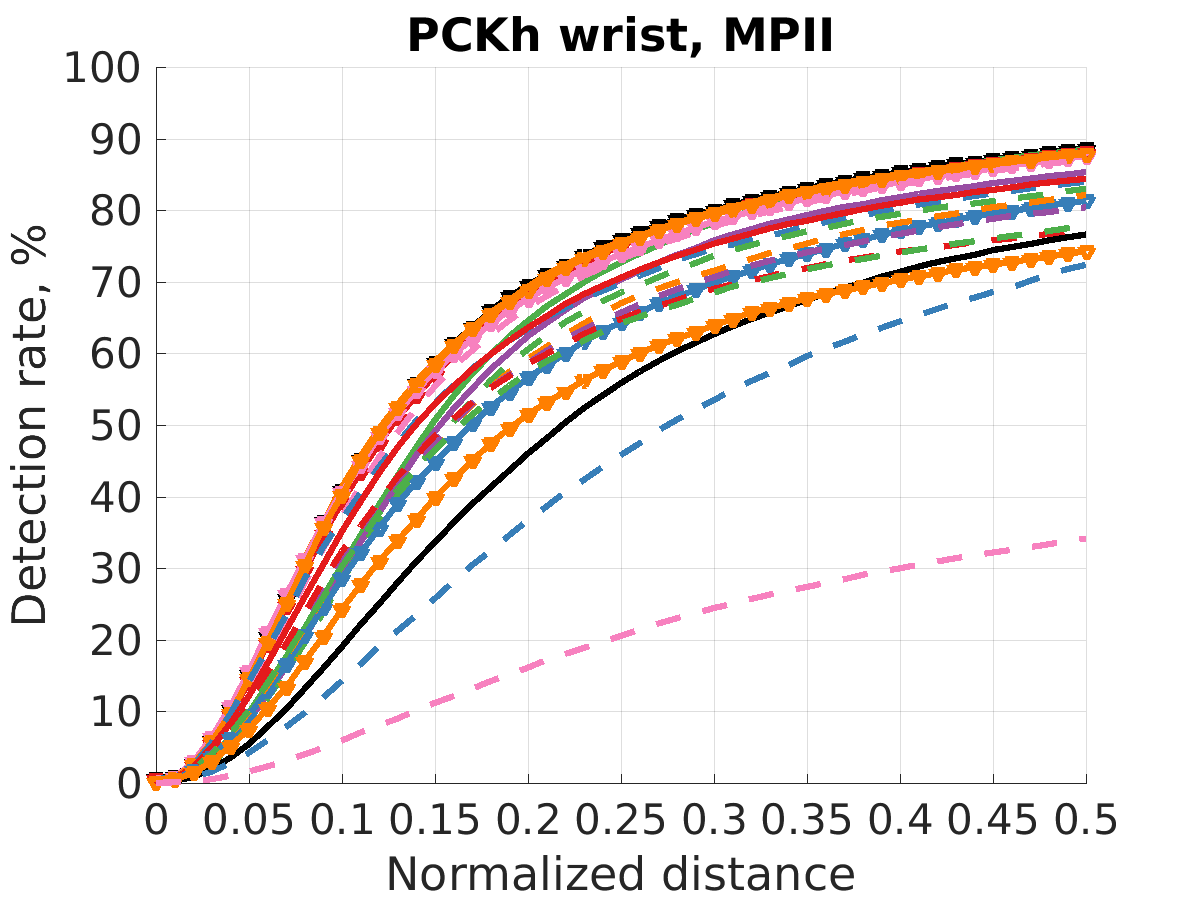

Overall performance

PCKh evaluation measure

PCKh: PCK measure that uses the matching threshold as 50% of the head segment length.
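To make the definition concrete, here is a minimal Python sketch of a PCKh-style score for one person. It assumes joints are given as dicts from joint id to `(x, y)` and that the head segment length is already known. How the toolkit derives that length from the head rectangle, and whether it treats a distance exactly at the threshold as correct, are not stated on this page, so both are assumptions here. The function name `pckh` is hypothetical.

```python
import math


def pckh(pred, gt, head_size, thresh=0.5):
    """Fraction of ground-truth joints matched by a prediction.

    pred, gt: dicts mapping joint id -> (x, y) in image coordinates.
    head_size: head segment length in pixels (assumed to be given).
    A joint counts as correct when the predicted location lies within
    thresh * head_size of the ground truth (boundary counted as correct,
    which is an assumption). Joints missing from pred count as misses.
    """
    if not gt:
        raise ValueError("no ground-truth joints to evaluate")
    limit = thresh * head_size
    correct = 0
    for joint_id, (gx, gy) in gt.items():
        if joint_id not in pred:
            continue
        px, py = pred[joint_id]
        if math.hypot(px - gx, py - gy) <= limit:
            correct += 1
    return correct / len(gt)
```

With `thresh=0.5` this corresponds to the "PCKh @ 0.5" setting used in the table; the official numbers are computed by evaluatePCKh.m, which may differ from this sketch in details.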

PCKh @ 0.5

| Method | Head | Shoulder | Elbow | Wrist | Hip | Knee | Ankle | PCKh |
|---|---|---|---|---|---|---|---|---|
| Pishchulin et al., ICCV'13 | 74.3 | 49.0 | 40.8 | 34.1 | 36.5 | 34.4 | 35.2 | 44.1 |
| Tompson et al., NIPS'14 | 95.8 | 90.3 | 80.5 | 74.3 | 77.6 | 69.7 | 62.8 | 79.6 |
| Carreira et al., CVPR'16 | 95.7 | 91.7 | 81.7 | 72.4 | 82.8 | 73.2 | 66.4 | 81.3 |
| Tompson et al., CVPR'15 | 96.1 | 91.9 | 83.9 | 77.8 | 80.9 | 72.3 | 64.8 | 82.0 |
| Hu&Ramanan, CVPR'16 | 95.0 | 91.6 | 83.0 | 76.6 | 81.9 | 74.5 | 69.5 | 82.4 |
| Pishchulin et al., CVPR'16* | 94.1 | 90.2 | 83.4 | 77.3 | 82.6 | 75.7 | 68.6 | 82.4 |
| Lifshitz et al., ECCV'16 | 97.8 | 93.3 | 85.7 | 80.4 | 85.3 | 76.6 | 70.2 | 85.0 |
| Gkioxari et al., ECCV'16 | 96.2 | 93.1 | 86.7 | 82.1 | 85.2 | 81.4 | 74.1 | 86.1 |
| Rafi et al., BMVC'16 | 97.2 | 93.9 | 86.4 | 81.3 | 86.8 | 80.6 | 73.4 | 86.3 |
| Belagiannis&Zisserman, FG'17** | 97.7 | 95.0 | 88.2 | 83.0 | 87.9 | 82.6 | 78.4 | 88.1 |
| Insafutdinov et al., ECCV'16 | 96.8 | 95.2 | 89.3 | 84.4 | 88.4 | 83.4 | 78.0 | 88.5 |
| Wei et al., CVPR'16* | 97.8 | 95.0 | 88.7 | 84.0 | 88.4 | 82.8 | 79.4 | 88.5 |
| Bulat&Tzimiropoulos, ECCV'16 | 97.9 | 95.1 | 89.9 | 85.3 | 89.4 | 85.7 | 81.7 | 89.7 |
| Newell et al., ECCV'16 | 98.2 | 96.3 | 91.2 | 87.1 | 90.1 | 87.4 | 83.6 | 90.9 |
| Tang et al., ECCV'18 | 97.4 | 96.4 | 92.1 | 87.7 | 90.2 | 87.7 | 84.3 | 91.2 |
| Ning et al., TMM'17 | 98.1 | 96.3 | 92.2 | 87.8 | 90.6 | 87.6 | 82.7 | 91.2 |
| Luvizon et al., arXiv'17 | 98.1 | 96.6 | 92.0 | 87.5 | 90.6 | 88.0 | 82.7 | 91.2 |
| Chu et al., CVPR'17 | 98.5 | 96.3 | 91.9 | 88.1 | 90.6 | 88.0 | 85.0 | 91.5 |
| Chou et al., arXiv'17 | 98.2 | 96.8 | 92.2 | 88.0 | 91.3 | 89.1 | 84.9 | 91.8 |
| Chen et al., ICCV'17 | 98.1 | 96.5 | 92.5 | 88.5 | 90.2 | 89.6 | 86.0 | 91.9 |
| Yang et al., ICCV'17 | 98.5 | 96.7 | 92.5 | 88.7 | 91.1 | 88.6 | 86.0 | 92.0 |
| Ke et al., ECCV'18 | 98.5 | 96.8 | 92.7 | 88.4 | 90.6 | 89.3 | 86.3 | 92.1 |
| Tang et al., ECCV'18 | 98.4 | 96.9 | 92.6 | 88.7 | 91.8 | 89.4 | 86.2 | 92.3 |
| Zhang et al., arXiv'19 | 98.6 | 97.0 | 92.8 | 88.8 | 91.7 | 89.8 | 86.6 | 92.5 |
| Su et al., arXiv'19*** | 98.7 | 97.5 | 94.3 | 90.7 | 93.4 | 92.2 | 88.4 | 93.9 |
| Bulat et al., FG'2020*** | 98.8 | 97.5 | 94.4 | 91.2 | 93.2 | 92.2 | 89.3 | 94.1 |

* methods trained when adding LSP training and LSP extended sets to the MPII training set

** methods trained on MS COCO training and finetuned on MPII training set

*** methods trained on HSSK training and MPII training sets


Performance vs. complexity measures


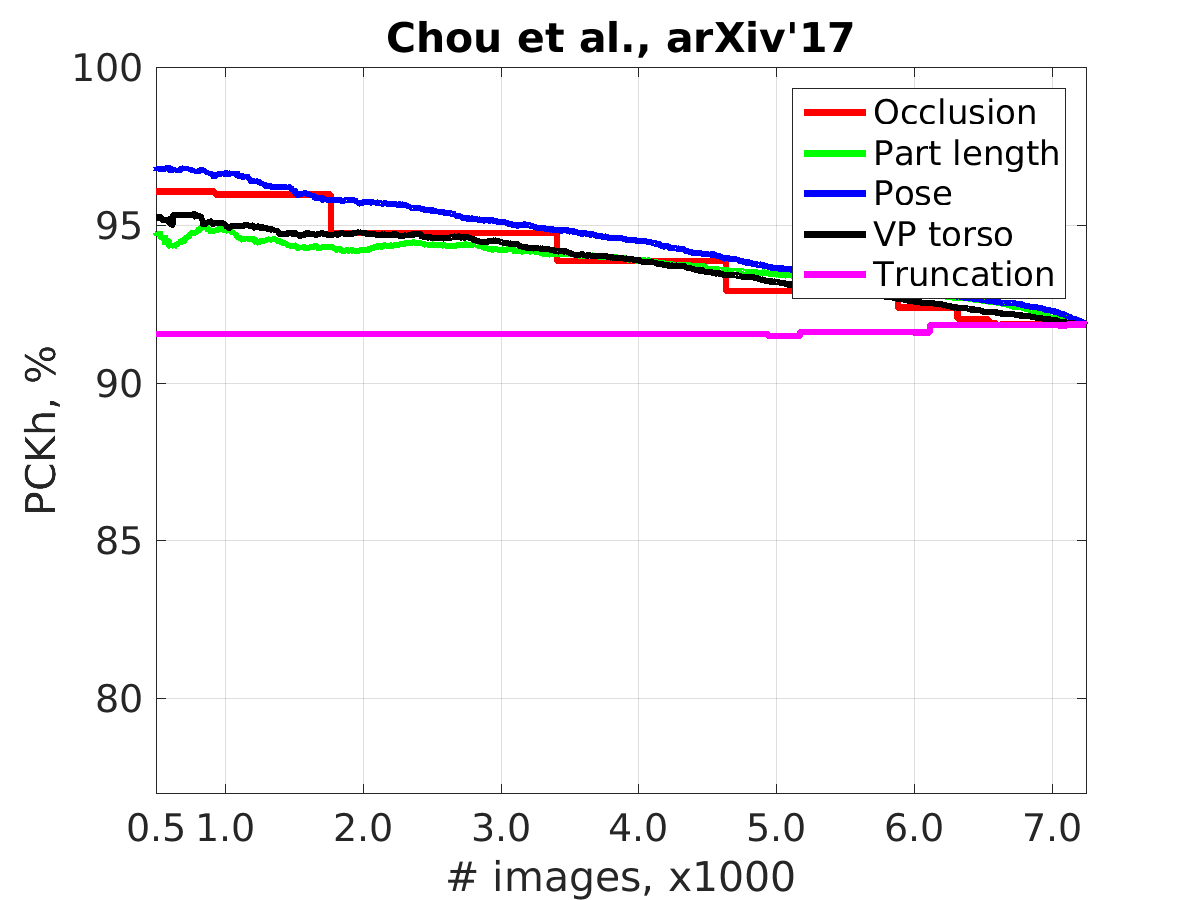

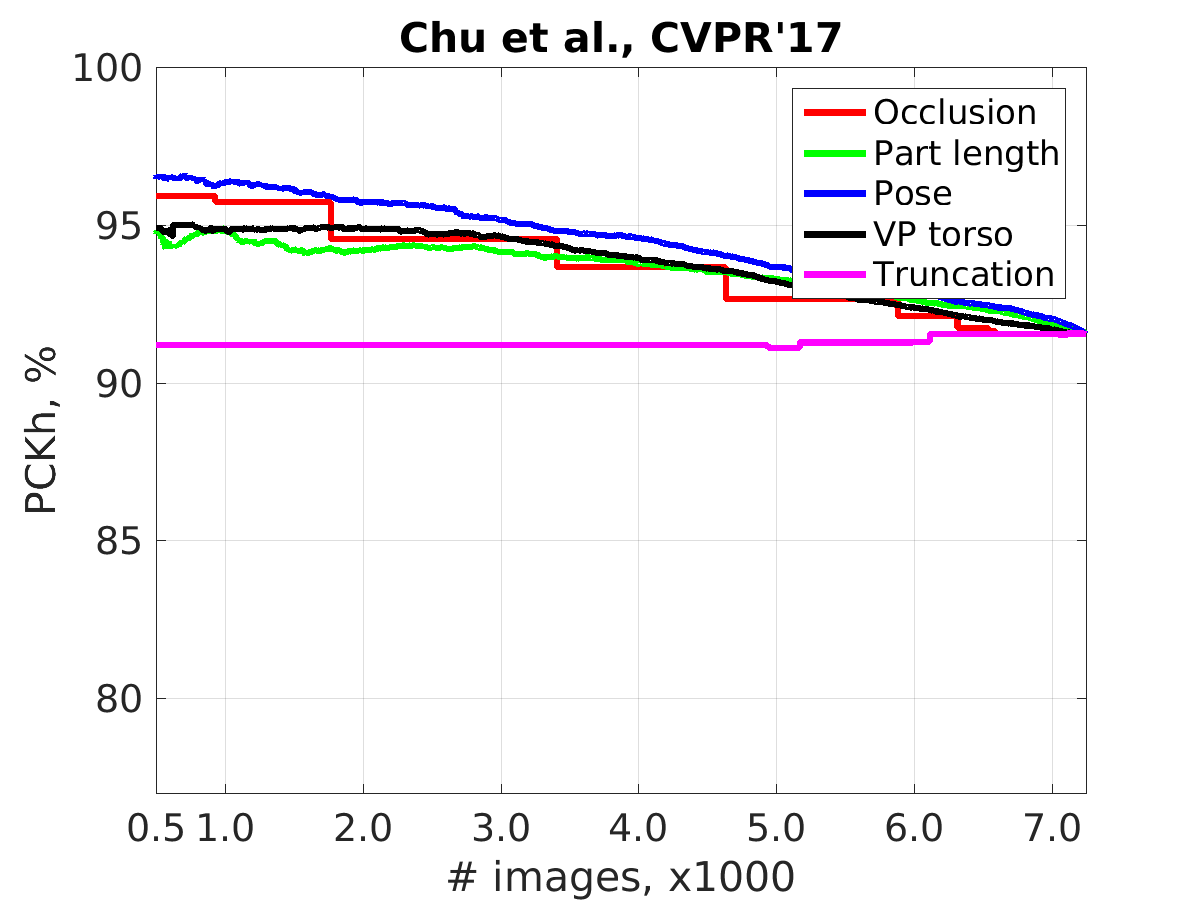

Performance by pose


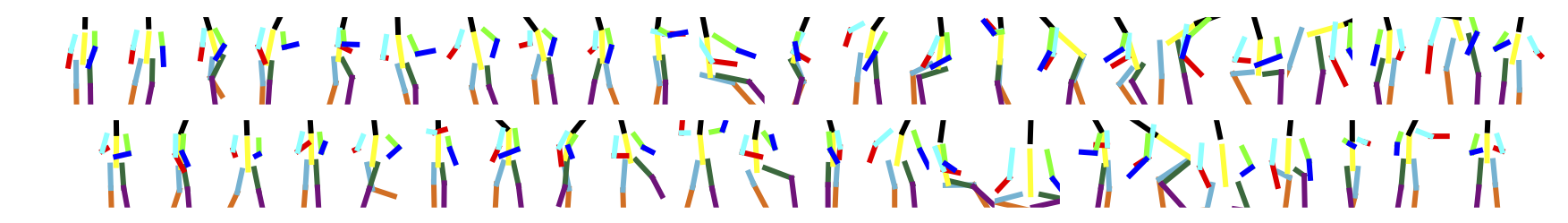

Shown are medoids of body pose clusters arranged according to pose complexity.

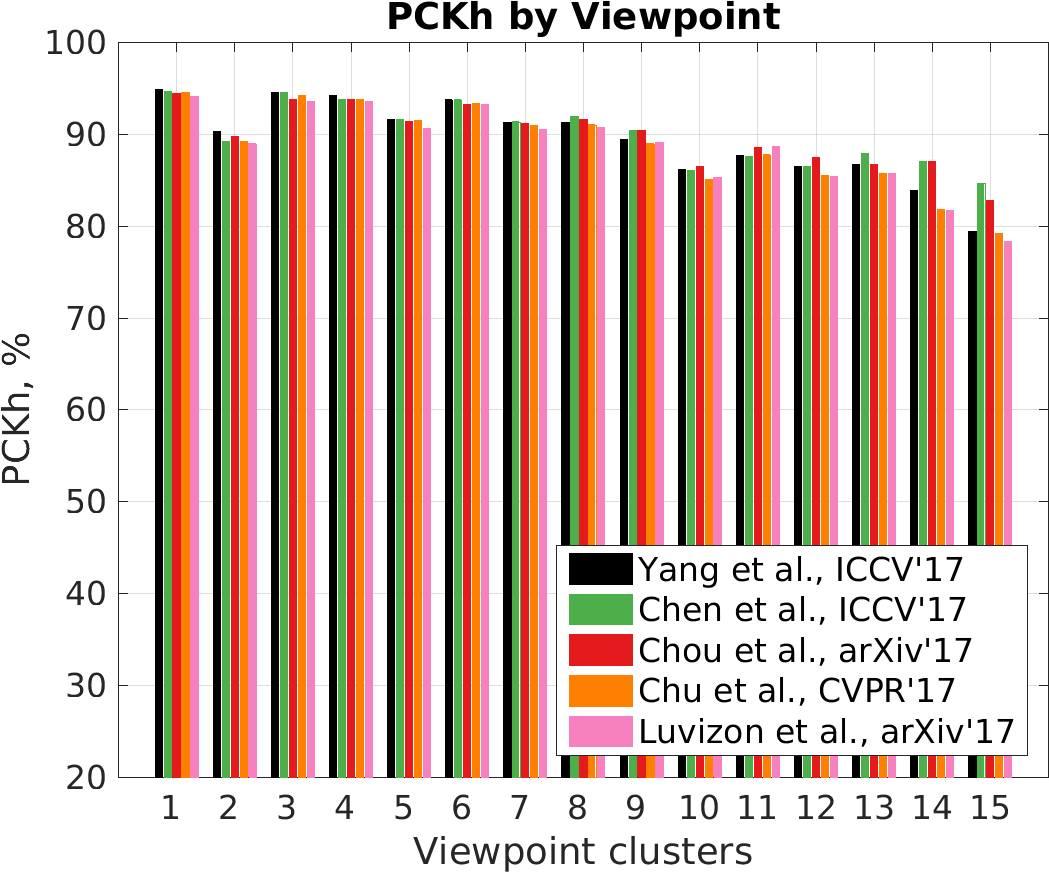

Performance by viewpoint and activity


Shown are medoids of 3D torso orientation clusters arranged according to the cluster size:

cluster 1 represents upright frontal torso,

cluster 2 represents slightly rotated upright backward facing torso,

cluster 6 represents torso bending towards the camera, etc.

Multi-Person

Evaluation is performed on groups of multiple people ("Multi-Person" subset).

mAP evaluation measure

Mean Average Precision (mAP) based evaluation of body joint predictions forming consistent body pose configurations.
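As a rough illustration of the measure, the Python sketch below computes a non-interpolated average precision for one joint type from scored predictions, then averages over joint types. It assumes each prediction has already been marked as correct or incorrect by some matching step. The matching of predictions to ground-truth people and the exact AP variant used by evaluateAP.m are not described on this page, so this is not a reimplementation of the toolkit. The names `average_precision` and `mean_average_precision` are hypothetical.

```python
def average_precision(scores, is_correct, num_gt):
    """Non-interpolated AP for one joint type.

    scores: confidence score of each prediction.
    is_correct: True where the prediction matched a ground-truth joint
        (the matching itself is assumed to be done elsewhere).
    num_gt: number of ground-truth joints of this type.
    """
    if len(scores) != len(is_correct):
        raise ValueError("scores and is_correct must have equal length")
    if num_gt == 0:
        return 0.0
    # Rank predictions from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    true_positives = 0
    precision_sum = 0.0
    for rank, idx in enumerate(order, start=1):
        if is_correct[idx]:
            true_positives += 1
            # Precision at the rank where recall increases.
            precision_sum += true_positives / rank
    return precision_sum / num_gt


def mean_average_precision(per_joint_ap):
    """Average the per-joint AP values into a single mAP."""
    if not per_joint_ap:
        raise ValueError("need at least one AP value")
    return sum(per_joint_ap) / len(per_joint_ap)
```

The per-joint columns in the tables below correspond to AP values per joint type, and the last column to their mean, as reported by the official evaluation.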

Performance on full set

mAP @ 0.5

| Method | Head | Shoulder | Elbow | Wrist | Hip | Knee | Ankle | mAP |
|---|---|---|---|---|---|---|---|---|
| Iqbal&Gall, ECCVw'16 | 58.4 | 53.9 | 44.5 | 35.0 | 42.2 | 36.7 | 31.1 | 43.1 |
| Insafutdinov et al., ECCV'16* | 78.4 | 72.5 | 60.2 | 51.0 | 57.2 | 52.0 | 45.4 | 59.5 |
| Insafutdinov et al., arXiv'16a* | 89.4 | 84.5 | 70.4 | 59.3 | 68.9 | 62.7 | 54.6 | 70.0 |
| Levinkov et al., CVPR'17 | 89.8 | 85.2 | 71.8 | 59.6 | 71.1 | 63.0 | 53.5 | 70.6 |
| Varadarajan et al., arXiv'17 | 92.1 | 85.9 | 72.9 | 61.7 | 72.0 | 64.6 | 56.6 | 72.2 |
| Insafutdinov et al., CVPR'17 | 88.8 | 87.0 | 75.9 | 64.9 | 74.2 | 68.8 | 60.5 | 74.3 |
| Cao et al., CVPR'17 | 91.2 | 87.6 | 77.7 | 66.8 | 75.4 | 68.9 | 61.7 | 75.6 |
| Fang et al., arXiv'16 | 88.4 | 86.5 | 78.6 | 70.4 | 74.4 | 73.0 | 65.8 | 76.7 |
| Newell et al., NIPS'17 | 92.1 | 89.3 | 78.9 | 69.8 | 76.2 | 71.6 | 64.7 | 77.5 |
| Fieraru et al., CVPRw'18 | 91.8 | 89.5 | 80.4 | 69.6 | 77.3 | 71.7 | 65.5 | 78.0 |

* methods trained when adding LSP training and LSP extended sets to the MPII training set

Performance on subset of 288 testing images

mAP @ 0.5

| Method | Head | Shoulder | Elbow | Wrist | Hip | Knee | Ankle | mAP |
|---|---|---|---|---|---|---|---|---|
| Pishchulin et al., CVPR'16* | 73.1 | 71.7 | 58.0 | 39.9 | 56.1 | 43.5 | 31.9 | 53.5 |
| Iqbal&Gall, ECCVw'16 | 70.0 | 65.2 | 56.4 | 46.1 | 52.7 | 47.9 | 44.5 | 54.7 |
| Insafutdinov et al., ECCV'16* | 87.9 | 84.0 | 71.9 | 63.9 | 68.8 | 63.8 | 58.1 | 71.2 |
| Newell&Deng, arXiv'16 | 91.5 | 87.2 | 75.9 | 65.4 | 72.2 | 67.0 | 62.1 | 74.5 |
| Insafutdinov et al., arXiv'16a* | 92.1 | 88.5 | 76.4 | 67.8 | 73.6 | 68.7 | 62.3 | 75.6 |
| Varadarajan et al., arXiv'17 | 92.9 | 88.8 | 77.7 | 67.8 | 74.6 | 67.0 | 63.8 | 76.1 |
| Cao et al., CVPR'17 | 92.9 | 91.3 | 82.3 | 72.6 | 76.0 | 70.9 | 66.8 | 79.0 |
| Fang et al., arXiv'16 | 89.3 | 88.1 | 80.7 | 75.5 | 73.7 | 76.7 | 70.0 | 79.1 |
| Insafutdinov et al., CVPR'17 | 92.2 | 91.3 | 80.8 | 71.4 | 79.1 | 72.6 | 67.8 | 79.3 |

* methods trained when adding LSP training and LSP extended sets to the MPII training set
